There’s a new automated propaganda machine driving global politics. How it works and what it will mean for the future of democracy.

“This is a propaganda machine. It’s targeting people individually to recruit them to an idea. It’s a level of social engineering that I’ve never seen before. They’re capturing people and then keeping them on an emotional leash and never letting them go,” said professor Jonathan Albright.

Albright, an assistant professor and data scientist at Elon University, started digging into fake news sites after Donald Trump was elected president. Through extensive research and interviews with Albright and other key experts in the field, including Samuel Woolley, Head of Research at Oxford University’s Computational Propaganda Project, and Martin Moore, Director of the Centre for the Study of Media, Communication and Power at King’s College, it became clear to Scout that this phenomenon was about much more than just a few fake news stories. It was a piece of a much bigger and darker puzzle: a Weaponized AI Propaganda Machine being used to manipulate our opinions and behavior to advance specific political agendas.

By leveraging automated emotional manipulation alongside swarms of bots, Facebook dark posts, A/B testing, and fake news networks, a company called Cambridge Analytica has activated an invisible machine that preys on the personalities of individual voters to create large shifts in public opinion. Many of these technologies have been used individually to some effect before, but together they make up a nearly impenetrable voter manipulation machine that is quickly becoming the new deciding factor in elections around the world.

Most recently, Analytica helped elect U.S. President Donald Trump, secured a win for the Brexit Leave campaign, and led Ted Cruz’s 2016 campaign surge, shepherding him from the back of the GOP primary pack to the front.

Presumably because of its alliances, Analytica has declined to work on any Democratic campaigns, at least in the U.S. It is, however, in talks to help Trump manage public opinion around his presidential policies and to expand sales for the Trump Organization. Cambridge Analytica is now expanding aggressively into U.S. commercial markets and is also meeting with right-wing parties and governments in Europe, Asia, and Latin America.

The company is owned and controlled by conservative and alt-right interests that are also deeply entwined in the Trump administration. The Mercer family is both a major owner of Cambridge Analytica and one of Trump’s biggest donors. Steve Bannon, in addition to acting as Trump’s Chief Strategist and a member of the National Security Council, is a Cambridge Analytica board member. Until recently, Analytica’s CTO was the acting CTO at the Republican National Convention.

Cambridge Analytica isn’t the only company that could pull this off — but it is the most powerful right now. Understanding Cambridge Analytica and the bigger AI Propaganda Machine is essential for anyone who wants to understand modern political power, build a movement, or keep from being manipulated. The Weaponized AI Propaganda Machine it represents has become the new prerequisite for political success in a world of polarization, isolation, trolls, and dark posts.

There’s been a wave of reporting on Cambridge Analytica itself and solid coverage of individual aspects of the machine — bots, fake news, microtargeting — but none so far (that we have seen) that portrays the intense collective power of these technologies or the frightening level of influence they’re likely to have on future elections.

In the past, political messaging and propaganda battles were arms races to weaponize narrative through new mediums — waged in print, on the radio, and on TV. This new wave has brought the world something exponentially more insidious — personalized, adaptive, and ultimately addictive propaganda. Silicon Valley spent the last ten years building platforms whose natural end state is digital addiction. In 2016, Trump and his allies hijacked them.

We have entered a new political age. At Scout, we believe that the future of constructive, civic dialogue and free and open elections depends on our ability to understand and anticipate it.

Welcome to the age of Weaponized AI Propaganda.

Part 1: Big Data Surveillance Meets Computational Psychology

Any company can aggregate and purchase big data, but Cambridge Analytica has developed a model to translate that data into a personality profile used to predict, then ultimately change your behavior. That model itself was developed by paying a Cambridge psychology professor to copy the groundbreaking original research of his colleague through questionable methods that violated Amazon’s Terms of Service.

Based on its origins, Cambridge Analytica appears ready to capture and buy whatever data it needs to accomplish its ends.

In 2013, Dr. Michal Kosinski, then a PhD candidate at the University of Cambridge’s Psychometrics Center, released a groundbreaking study announcing a new model he and his colleagues had spent years developing.

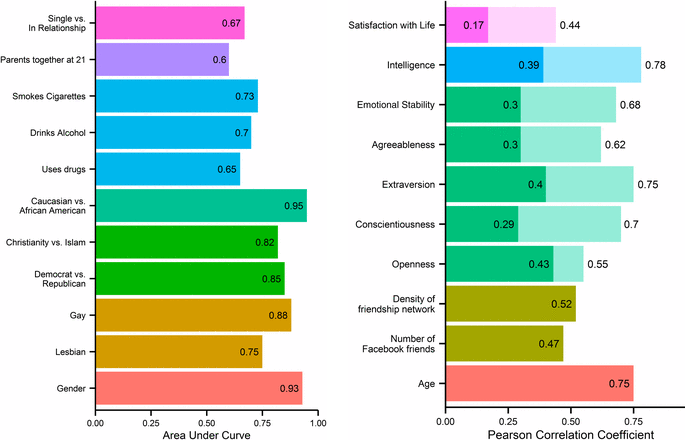

By correlating subjects’ Facebook Likes with their OCEAN scores — a standard-bearing personality questionnaire used by psychologists — the team was able to identify an individual’s gender, sexuality, political beliefs, and personality traits based only on what they had liked on Facebook.

Image Credit: Michal Kosinski, David Stillwell, and Thore Graepel

According to Zurich’s Das Magazin, which profiled Kosinski in late 2016, “with a mere ten ‘likes’ as input his model could appraise a person’s character better than an average coworker. With seventy, it could ‘know’ a subject better than a friend; with 150 likes, better than their parents.

With 300 likes, Kosinski’s machine could predict a subject’s behavior better than their partner. With even more likes it could exceed what a person thinks they know about themselves.”
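To make the mechanism concrete, here is a minimal sketch of how Likes can be turned into a trait score. It is not the researchers’ published model; it swaps in a much simpler smoothed log-odds weight per Like, and every page name, label, and function name below is invented for illustration.

```python
import math
from collections import defaultdict

# Toy, invented training data: (set of liked pages, binary trait label).
# Page names and labels are hypothetical and carry no real-world meaning.
TRAINING = [
    ({"hiking", "poetry", "jazz"}, 1),
    ({"poetry", "museums"}, 1),
    ({"jazz", "museums", "hiking"}, 1),
    ({"trucks", "football"}, 0),
    ({"football", "grilling"}, 0),
    ({"trucks", "grilling", "hiking"}, 0),
]


def fit_like_weights(rows, smoothing=1.0):
    """Return a log-odds weight per Like; positive values lean toward label 1."""
    pos_counts, neg_counts = defaultdict(float), defaultdict(float)
    n_pos = sum(1 for _, label in rows if label == 1)
    n_neg = len(rows) - n_pos
    for likes, label in rows:
        for like in likes:
            (pos_counts if label == 1 else neg_counts)[like] += 1
    vocab = set(pos_counts) | set(neg_counts)
    weights = {}
    for like in vocab:
        # Smoothed share of each group that liked this page.
        p_pos = (pos_counts[like] + smoothing) / (n_pos + 2 * smoothing)
        p_neg = (neg_counts[like] + smoothing) / (n_neg + 2 * smoothing)
        weights[like] = math.log(p_pos / p_neg)
    return weights


def trait_probability(likes, weights):
    """Sum the weights of known Likes and squash the total to a 0-1 score."""
    score = sum(weights.get(like, 0.0) for like in likes)
    return 1.0 / (1.0 + math.exp(-score))


weights = fit_like_weights(TRAINING)
few_likes = trait_probability({"poetry"}, weights)
more_likes = trait_probability({"poetry", "museums", "jazz"}, weights)
```

In this toy setup, each additional consistent Like pushes the score further from 0.5, which is the intuition behind the claim that more Likes yield a sharper profile.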

Not long afterward, Kosinski was approached by Aleksandr Kogan, a fellow Cambridge professor in the psychology department, about licensing his model to SCL Elections, a company that claimed its specialty lay in manipulating elections. The offer would have meant a significant payout for Kosinski’s lab. Still, he declined, worried about the firm’s intentions and the downstream effects it could have.

It had taken Kosinski and his colleagues years to develop that model, but with his methods and findings now out in the world, there was little to stop SCL Elections from replicating them. It would seem they did just that.

According to a Guardian investigation, in early 2014, just a few months after Kosinski declined their offer, SCL partnered with Kogan instead. As a part of their relationship, Kogan paid Amazon Mechanical Turk workers $1 each to take the OCEAN quiz.

There was just one catch: To take the quiz, users were required to provide access to all of their Facebook data. They were told the data would be used for research.

The job was reported to Amazon for violating the platform’s Terms of Service. What many of the Turks likely didn’t realize: According to documents reviewed by The Guardian, “Kogan also captured the same data for each person’s unwitting friends.”

The data gathered from Kogan’s study went on to birth Cambridge Analytica, which spun out of SCL Elections soon after. The name, metaphorically at least, was a nod to Kogan’s work — and a dig at Kosinski.

But that early trove of user data was just the beginning: the seed Analytica needed to build its own model for analyzing users’ personalities without having to rely on the lengthy OCEAN test.

After a successful proof of concept and backed by wealthy conservative investors, Analytica went on a data shopping spree for the ages, snapping up data about your shopping habits, land ownership, where you attend church, what stores you visit, what magazines you subscribe to — all of which is for sale from a range of data brokers and third party organizations selling information about you.

Analytica aggregated this data with voter rolls and publicly available online data, including Facebook likes, and put it all into its predictive personality model.

Alexander Nix, Cambridge Analytica’s CEO, likes to boast that Analytica’s personality model has allowed it to create a personality profile for every adult in the U.S.: 220 million of them, each with up to 5,000 data points. And those profiles are continually updated and improved as you spew out more data online.

Albright also believes that your Facebook and Twitter posts are being collected and integrated back into Cambridge Analytica’s personality profiles. “Twitter and also Facebook are being used to collect a lot of responsive data because people are impassioned, they reply, they retweet, but they also include basically their entire argument and their entire background on this topic,” Albright explains.

Part 2: Automated Engagement Scripts that Prey on Your Emotions

Collecting massive quantities of data about voters’ personalities might seem unsettling, but it’s actually not what sets Cambridge Analytica apart. For Analytica and other companies like them, it’s what they do with that data that really matters.

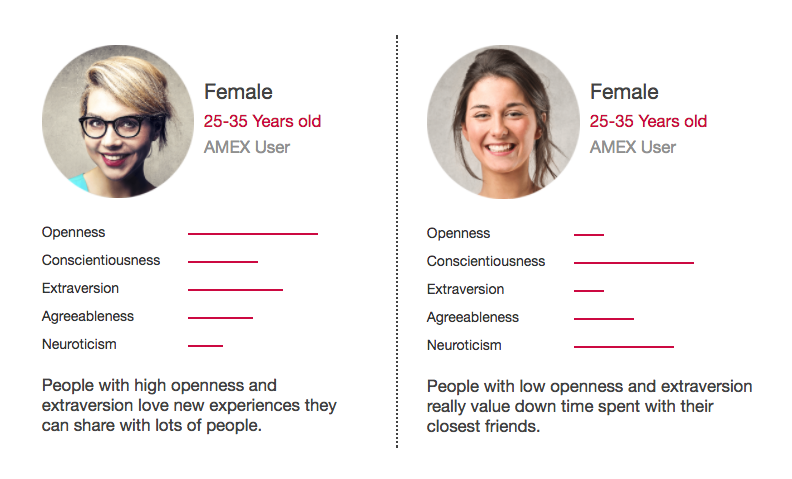

“Your behavior is driven by your personality and actually the more you can understand about people’s personality as psychological drivers, the more you can actually start to really tap in to why and how they make their decisions,” Nix explained to Bloomberg’s Sasha Issenberg. “We call this behavioral microtargeting and this is really our secret sauce, if you like. This is what we’re bringing to America.”

Image Credit: Cambridge Analytica

Using those dossiers, or psychographic profiles as Analytica calls them, Cambridge Analytica not only identifies which voters are most likely to swing for their causes or candidates; they use that information to predict and then change their future behavior.

As Vice reported recently, Kosinski and a colleague are now working on a new set of research, yet to be published, that addresses the effectiveness of these methods. Their early findings: Using personality targeting, Facebook posts can attract up to 63 percent more clicks and 1,400 more conversions.

Scout reached out to Cambridge Analytica with a detailed list of questions about their communications tactics, but the company declined to answer any questions or to comment on any of their tactics.

But researchers across the technology and media ecosystem who have been following Cambridge Analytica’s political messaging activities have unearthed an expansive, adaptive online network that automates the manipulation of voters at a scale never before seen in political messaging.

“They [the Trump campaign] were using 40-50,000 different variants of ad every day that were continuously measuring responses and then adapting and evolving based on that response,” Martin Moore, director of King’s College’s Centre for the Study of Media, Communication and Power, told The Guardian in early December. “It’s all done completely opaquely and they can spend as much money as they like on particular locations because you can focus on a five-mile radius.”

Where traditional pollsters might ask a person outright how they plan to vote, Analytica relies not on what they say but what they do, tracking their online movements and interests and serving up multivariate ads designed to change a person’s behavior by preying on individual personality traits.

“For example,” Nix wrote in an op-ed last year about Analytica’s work on the Cruz campaign, “our issues model identified that there was a small pocket of voters in Iowa who felt strongly that citizens should be required by law to show photo ID at polling stations.”

“Leveraging our other data models, we were able to advise the campaign on how to approach this issue with specific individuals based on their unique profiles in order to use this relatively niche issue as a political pressure point to motivate them to go out and vote for Cruz. For people in the ‘Temperamental’ personality group, who tend to dislike commitment, messaging on the issue should take the line that showing your ID to vote is ‘as easy as buying a case of beer’. Whereas the right message for people in the ‘Stoic Traditionalist’ group, who have strongly held conventional views, is that showing your ID in order to vote is simply part of the privilege of living in a democracy.”

For Analytica, the feedback is instant and the response automated: Did this specific swing voter in Pennsylvania click on the ad attacking Clinton’s negligence over her email server? Yes? Serve her more content that emphasizes failures of personal responsibility. No? The automated script will try a different headline, perhaps one that plays on a different personality trait — say the voter’s tendency to be agreeable toward authority figures. Perhaps: “Top Intelligence Officials Agree: Clinton’s Emails Jeopardized National Security.”
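The feedback loop described above resembles a standard technique from online ad testing. The sketch below uses an epsilon-greedy rule as a stand-in; nothing in the reporting confirms which algorithm Analytica actually ran, and the class name, variant labels, and click probabilities are all hypothetical.

```python
import random


class HeadlineTester:
    """Epsilon-greedy selection over ad variants for one audience segment."""

    def __init__(self, variants, epsilon=0.1, seed=0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.shown = {v: 0 for v in variants}
        self.clicked = {v: 0 for v in variants}

    def click_rate(self, variant):
        shown = self.shown[variant]
        return self.clicked[variant] / shown if shown else 0.0

    def choose(self):
        # Explore a random variant occasionally; otherwise exploit the
        # variant with the best observed click rate so far.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.shown))
        return max(self.shown, key=self.click_rate)

    def record(self, variant, clicked):
        self.shown[variant] += 1
        if clicked:
            self.clicked[variant] += 1


# Hypothetical "true" click probabilities, invented for this simulation.
TRUE_RATES = {"personal_responsibility": 0.05, "authority_figures": 0.30}

tester = HeadlineTester(list(TRUE_RATES))
audience = random.Random(1)  # stands in for real voters responding to ads
for _ in range(2000):
    variant = tester.choose()
    tester.record(variant, audience.random() < TRUE_RATES[variant])
```

After enough impressions, the loop shifts most of its traffic toward whichever headline draws more clicks, without anyone deciding in advance which message should win.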

Much of this is done through Facebook dark posts, which are only visible to those being targeted.

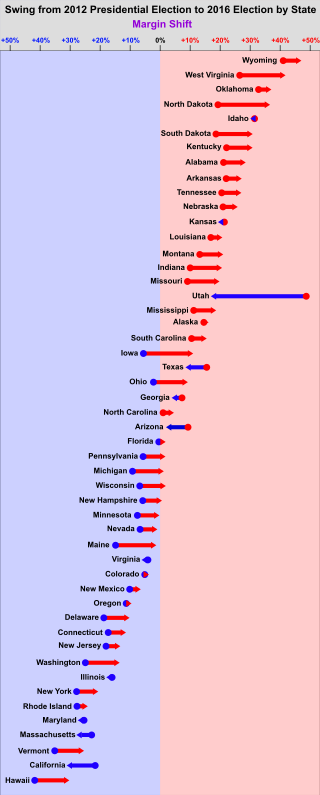

Based on users’ response to these posts, Cambridge Analytica was able to identify which of Trump’s messages were resonating and where. That information was also used to shape Trump’s campaign travel schedule. If 73 percent of targeted voters in Kent County, Mich. clicked on one of three articles about bringing back jobs? Schedule a Trump rally in Grand Rapids that focuses on economic recovery.

Political analysts in the Clinton campaign, who were basing their tactics on traditional polling methods, laughed when Trump scheduled campaign events in the so-called blue wall — a group of states that includes Michigan, Pennsylvania, and Wisconsin and has traditionally fallen to Democrats. But Cambridge Analytica saw they had an opening based on measured engagement with their Facebook posts. It was the small margins in Michigan, Pennsylvania and Wisconsin that won Trump the election.

Image Credit: Ali Zifan/Wikimedia Commons

Dark posts were also used to depress voter turnout among key groups of Democratic voters. “In this election, dark posts were used to try to suppress the African-American vote,” wrote journalist and Open Society fellow McKenzie Funk in a New York Times editorial. “According to Bloomberg, the Trump campaign sent ads reminding certain selected black voters of Hillary Clinton’s infamous ‘super predator’ line. It targeted Miami’s Little Haiti neighborhood with messages about the Clinton Foundation’s troubles in Haiti after the 2010 earthquake.”

Because dark posts are only visible to the targeted users, there’s no way for anyone outside of Analytica or the Trump campaign to track the content of these ads. In this case, there was no FEC oversight, no public scrutiny of Trump’s attack ads. Just the rapid-eye-movement of millions of individual users scanning their Facebook feeds.

In the weeks leading up to a final vote, a campaign could launch a $10-100 million dark post campaign targeting just a few million voters in swing districts and no one would know. This may be where future ‘black-swan’ election upsets are born.

“These companies,” Moore says, “have found a way of transgressing 150 years of legislation that we’ve developed to make elections fair and open.”

Part 3: A Propaganda Network to Accelerate Ideas in Minutes

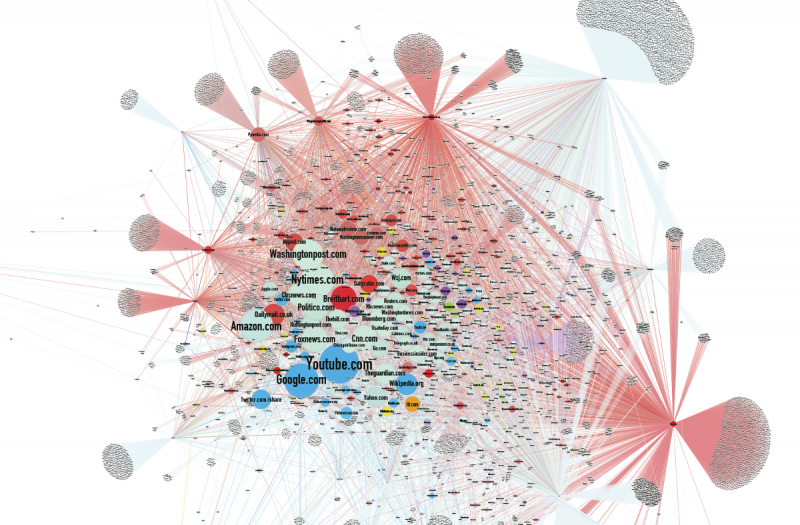

Meanwhile, surprised by the results of the 2016 presidential race, Albright started looking into the ‘fake news problem’. As a part of his research, Albright scraped 306 fake news sites to determine how exactly they were all connected to each other and the mainstream news ecosystem. What he found was unprecedented — a network of 23,000 pages and 1.3 million hyperlinks.

Image Credit: Jonathan Albright

“The sites in the fake news and hyper-biased #MCM network,” Albright writes, “have a very small ‘node’ size — this means they are linking out heavily to mainstream media, social networks, and informational resources (most of which are in the ‘center’ of the network), but not many sites in their peer group are sending links back.”
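The pattern Albright describes, sites that link out heavily but receive few links back, can be measured by counting inbound and outbound links per domain. The following is a rough sketch under that assumption, not Albright’s actual pipeline; the link pairs and domain names are invented.

```python
from collections import defaultdict
from urllib.parse import urlparse

# Hypothetical scraped (source_url, target_url) pairs; all domains are invented.
LINKS = [
    ("http://fringe-a.example/story1", "http://bigpaper.example/politics"),
    ("http://fringe-a.example/story2", "http://social.example/share"),
    ("http://fringe-a.example/story3", "http://fringe-a.example/about"),
    ("http://fringe-b.example/post", "http://bigpaper.example/world"),
    ("http://fringe-b.example/post", "http://social.example/share"),
    ("http://fringe-b.example/post", "http://fringe-a.example/story1"),
]


def domain(url):
    """Reduce a URL to its lowercase host name."""
    return urlparse(url).netloc.lower()


def degree_counts(links):
    """Map each domain to (distinct inbound domains, distinct outbound domains)."""
    out_links, in_links = defaultdict(set), defaultdict(set)
    for src, dst in links:
        s, d = domain(src), domain(dst)
        if s == d:
            continue  # ignore a site linking to itself
        out_links[s].add(d)
        in_links[d].add(s)
    sites = set(out_links) | set(in_links)
    return {site: (len(in_links[site]), len(out_links[site])) for site in sites}


counts = degree_counts(LINKS)
for site, (inbound, outbound) in sorted(counts.items()):
    print(f"{site}: {inbound} inbound, {outbound} outbound")
```

In this toy data, the fringe domains send links to the mainstream and social sites but get little or nothing in return, which is the asymmetry the quote points to.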

These sites aren’t owned or operated by any one individual entity, he says, but together they have been able to game Search Engine Optimization, increasing the visibility of fake and biased news anytime someone Googles an election-related term online — Trump, Clinton, Jews, Muslims, abortion, Obamacare.

“This network,” Albright wrote in a post exploring his findings, “is triggered on-demand to spread false, hyper-biased, and politically-loaded information.”

Even more shocking to him, though, was that this network of fake news creates a powerful infrastructure for companies like Cambridge Analytica to track voters and refine their personality targeting models.

“I scraped the trackers on these sites and I was absolutely dumbfounded. Every time someone likes one of these posts on Facebook or visits one of these websites, the scripts are then following you around the web. And this enables data-mining and influencing companies like Cambridge Analytica to precisely target individuals, to follow them around the web, and to send them highly personalised political messages.”

The web of fake and biased news that Albright uncovered created a propaganda wave that Cambridge Analytica could ride and then amplify. The more fake news that users engage with, the more addictive Analytica’s personality engagement algorithms can become.

Voter 35423 clicked on a fake story about Hillary’s sex-trafficking ring? Let’s get her to engage with more stories about Hillary’s supposed history of murder and sex trafficking.

The synergy between fake-content networks, automated message testing, and personality profiling will rapidly spread to other digital mediums. Albright’s most recent research focuses on an artificial intelligence that automatically creates YouTube videos about news and current events. The AI, which reacts to trending topics on Facebook and Twitter, pairs images and subtitles with a computer-generated voiceover. It spooled out nearly 80,000 videos through 19 different channels in just a few days.

Given its rapid development, the technology community needs to anticipate how AI propaganda will soon be used for emotional manipulation in mobile messaging, virtual reality, and augmented reality.

Part 4: A Bot Gestapo to Police Public Debate

If fake news created the scaffolding for this new automated political propaganda machine, bots, or fake social media profiles, have become its foot soldiers — an army of political robots used to control conversations on social media and silence and intimidate journalists and others who might undermine their messaging.

Samuel Woolley, Director of Research at the University of Oxford’s Computational Propaganda Project and a fellow at Google’s Jigsaw project, has dedicated his career to studying the role of bots in online political organizing — who creates them, how they’re used, and to what end.

Research by Woolley and his Oxford-based team in the lead-up to the 2016 election found that pro-Trump political messaging relied heavily on bots to spread fake news and discredit Hillary Clinton. By election day, Trump’s bots outnumbered hers, 5:1.

“The use of automated accounts was deliberate and strategic throughout the election, most clearly with pro-Trump campaigners and programmers who carefully adjusted the timing of content production during the debates, strategically colonized pro-Clinton hashtags, and then disabled activities after Election Day,” the study by Woolley’s team reported.

There’s no way to know for sure whether Cambridge Analytica was responsible for subcontracting the creation of those Trump bots. “In Western democracies,” Woolley says, “bots have often been bought or built by subcontractors of main digital contractor teams because there is less necessity to report these deeper layers of campaign satellite workers to election commissions.”

But if anyone outside of the Trump campaign is qualified to speculate, it would be Woolley. Led by Dr. Philip Howard, the team’s Principal Investigator, Woolley and his colleagues have been tracking the use of bots in political organizing since 2010. That’s when Howard, buried deep in research about the role Twitter played in the Arab Spring, first noticed thousands of bots coopting hashtags used by protesters.

Curious, he and his team began reaching out to hackers, botmakers, and political campaigns, getting to know them and trying to understand their work and motivations. Eventually, those creators would come to make up an informal network of nearly 100 informants that have kept Howard and his colleagues in the know about these bots over the last few years.

Before long, Howard and his team were getting the heads up about bot propaganda campaigns from the creators themselves. As more and more major international political figures began using botnets as just another tool in their campaigns, Howard, Woolley and the rest of their team studied the action unfolding.

The world these informants revealed is an international network of governments, consultancies (often with owners or top management just one degree away from official government actors), and individuals who build and maintain massive networks of bots to amplify the messages of political actors, spread messages counter to those of their opponents, and silence those whose views or ideas might threaten those same political actors.

“The Chinese, Iranian, and Russian governments employ their own social-media experts and pay small amounts of money to large numbers of people to generate pro-government messages,” Howard and his coauthors wrote in a 2015 research paper about the use of bots in the Venezuelan election.

Depending on which of those three categories bot creators fall into — government, consultancy or individual — they’re just as likely to be motivated by political beliefs as they are the opportunity to auction off their networks of digital influence to the highest bidder.

Not all bots are created equal. The average, run-of-the-mill Twitter bot is literally a robot — often programmed to retweet specific accounts to help popularize specific ideas or viewpoints. They also frequently respond automatically to Twitter users who use certain keywords or hashtags — often with pre-written slurs, insults or threats.

High-end bots on the other hand are more analog, operated by real people. They assume fake identities with distinct personalities and their responses to other users online are specific, intended to change their opinions or those of their followers by attacking their viewpoints. They have online friends and followers. They’re also far less likely to be discovered — and their accounts deactivated — by Facebook or Twitter.

Working on their own, Woolley estimates, an individual could build and maintain up to 400 of these boutique Twitter bots; on Facebook, which he says is more effective at identifying and shutting down fake accounts, an individual could manage 10-20.

As a result, these high-quality botnets are often used for multiple political campaigns. During the Brexit referendum, the Oxford team watched as one network of bots, previously used to influence the conversation around the Israeli/Palestinian conflict, was reactivated to fight for the Leave campaign. Individual profiles were updated to reflect the new debate, their personal taglines changed to ally with their new allegiances — and away they went.

Russia’s bot army has been the subject of particular scrutiny since a CIA special report revealed that Russia had been working to influence the election in Trump’s favor. Recently, reporter/comedian Samantha Bee traveled to Moscow to interview two paid Russian troll operators.

Clad in black ski masks to obscure their identities, the two talked with Bee about how and why they were using their accounts during the U.S. election. They told Bee that they pose as Americans online and target sites like The Wall Street Journal, The New York Post, The Washington Post, Facebook and Twitter. Their goal, they said, is to “piss off” other social media users, change their opinions, and silence their opponents.

Or, to put it in the words of Russian Troll #1, “when your opponent just … shut up.”

The Future of the Weaponized AI Propaganda Machine

The 2016 U.S. election is over, but the Weaponized AI Propaganda Machine is just warming up. And while each of its components would be worrying on its own, together they represent the arrival of a new era in political messaging: a steel wall between campaign winners and losers that can only be mounted by gathering more data, creating better personality analyses, rapidly developing engagement AI, and hiring more trolls.

At the moment, Trump and Cambridge Analytica are lapping their opponents. The more data they gather about individuals, the more Analytica and, by extension, Trump’s presidency will benefit from the network effects of their work — and the harder it will become to counter or fight back against their messaging in the court of public opinion.

Each Tweet that echoes forth from the @realDonaldTrump and @POTUS accounts, announcing and defending the administration’s moves, is met with a chorus of protest and argument. But even that negative engagement becomes a valuable asset for the Trump administration because every impulsive tweet can be treated like a psychographic experiment.

Trump’s first few weeks in office may have seemed bumbling, but they represent a clear signal of what lies ahead for Trump’s presidency — an executive order designed to enrage and distract his opponents as he moves to strip power from the judicial branch, installs Cambridge Analytica board member Steve Bannon on the National Security Council, and issues a series of unconstitutional gag orders to federal agencies.

Cambridge Analytica is likely to secure more contracts with federal agencies, and it is in the final stages of negotiations to begin managing White House digital communication throughout the Trump Administration. What new predictive-personality targeting becomes possible with potential access to data on U.S. voters from the IRS, Department of Homeland Security, or the NSA?

“Lenin wanted to destroy the state, and that’s my goal, too. I want to bring everything crashing down and destroy all of today’s establishment,” Bannon said in 2013. We know that Steve Bannon subscribes to a theory of history where a messianic ‘Grey Warrior’ consolidates power and remakes the global order. Bolstered by the success of Brexit and the Trump victory, Breitbart (of which Bannon was the Executive Chair until Trump’s election) and Cambridge Analytica (which Bannon sits on the board of) are now bringing fake news and automated propaganda to support far-right parties in at least Germany, France, Hungary, and India as well as parts of South America.

Never has such a radical, international political movement had the precision and power of this kind of propaganda technology. Whether or not leaders, engineers, designers, and investors in the technology community respond to this threat will shape major aspects of global politics for the foreseeable future.

The future of politics will not be a war of candidates or even cash on hand. And it’s not even about big data, as some have argued. Everyone will have access to big data — as Hillary did in the 2016 election.

From now on, the distinguishing factor between those who win elections and those who lose them will be how a candidate uses that data to refine their machine learning algorithms and automated engagement tactics. Elections in 2018 and 2020 won’t be a contest of ideas, but a battle of automated behavior change.

The fight for the future will be a proxy war of machine learning. It will be waged online, in secret, and with the unwitting help of all of you.

Anyone who wants to effect change needs to understand this new reality. It’s only by understanding this — and by building better automated engagement systems that amplify genuine human passion rather than manipulate it — that other candidates and causes around the globe will be able to compete.

At Scout, we’ve been speaking with political strategists, technologists, and machine learning experts about how AI propaganda will spread through society in the near future. We want to work with you, the Scout community, to scenario plan what happens next. Here are some implications to get the conversation started.

Implication #1: Public Sentiment Turns Into High-Frequency Trading

Thanks to stock-trading algorithms, large portions of public stock and commodity markets no longer resemble a human system and, some would argue, no longer serve their purpose as a signal of value. Instead they’re a battleground for high-frequency trading algorithms attempting to influence price or find nano-leverage in price position.

In the near future, we may see a similar process unfold in our public debates. Instead of battling press conferences and opinion articles, public opinion about companies and politicians may turn into multi-billion dollar battles between competing algorithms, each deployed to sway public sentiment. Stock trading algorithms already exist that analyze millions of Tweets and online posts in real-time and make trades in a matter of milliseconds based on changes in public sentiment. Algorithmic trading and ‘algorithmic public opinion’ are already connected. It’s likely they will continue to converge.

Implication #2: Personalized, Automated Propaganda That Adapts to Your Weaknesses

What if President Trump’s 2020 re-election campaign didn’t just have the best political messaging, but 250 million algorithmic versions of its political message, all updating in real time and personalized to precisely fit the worldview and attack the insecurities of their targets? Instead of having to deal with misleading politicians, we may soon witness a Cambrian explosion of pathologically lying political and corporate bots that constantly improve at manipulating us.

Implication #3: Not Just a Bubble, But Trapped in Your Own Ideological Matrix

Imagine that in 2020 you found out that your favorite politics page or group on Facebook didn’t actually have any other human members, but was filled with dozens or hundreds of bots that made you feel at home and your opinions validated? Is it possible that you might never find out?

By Anderson and Brett Horvath, Illustration by Cody Fitzgerald

This article was originally published by “Scout”
